AI Hallucinations

I want to talk about AI.

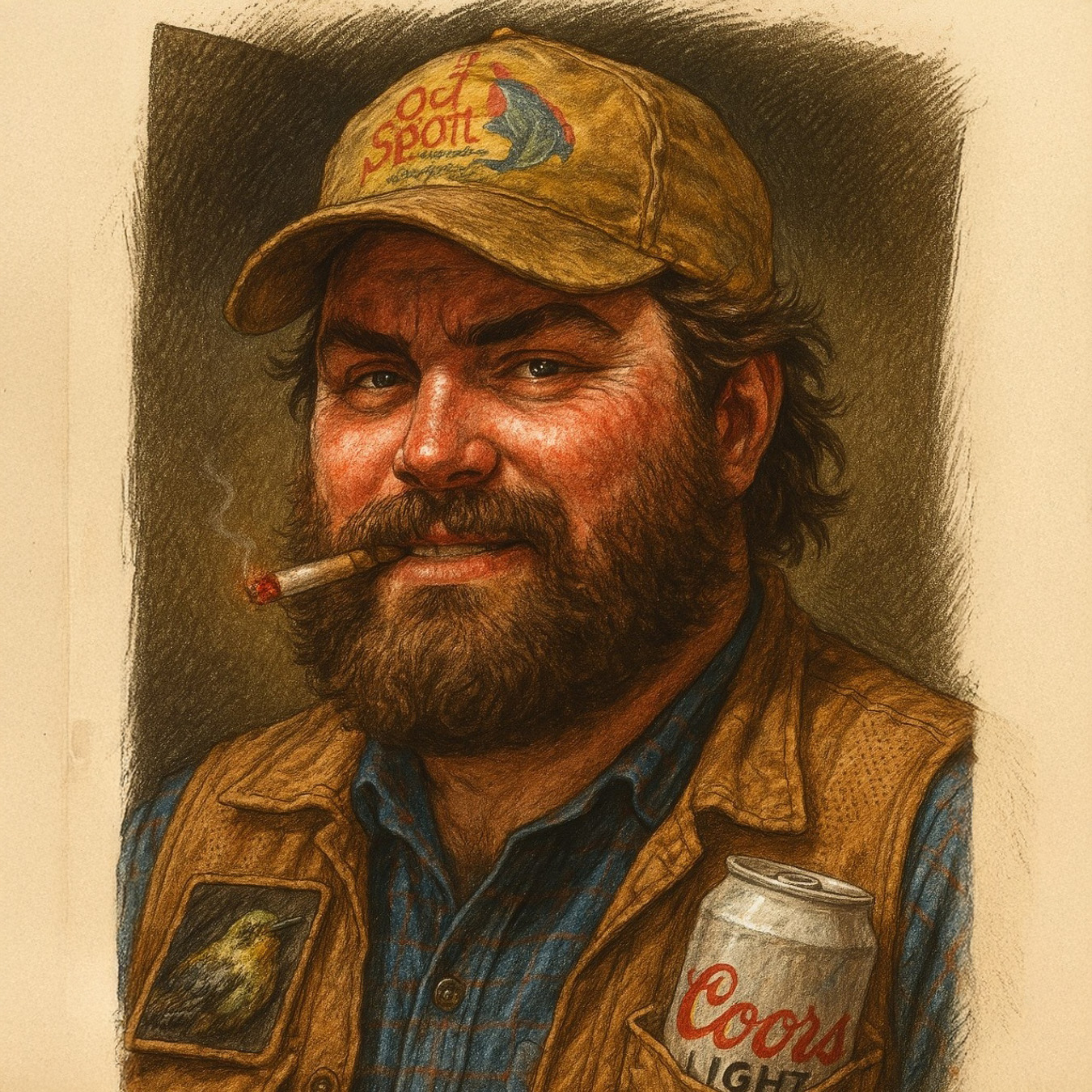

Our correspondent, Jeb, is a natural outdoorsman, whereas I’m more of an indoorsman. I observe. I report. I try to find the patterns in the little things you can notice if you’re paying attention, from both inside and out.

AI is the biggest thing in the world right now. Everyone’s jumping on the bandwagon, using it for all kinds of stuff. But what is AI, really? We talk about it like it’s this advanced, unknowable tech, but at the same time, we oversimplify it too.

At its core, AI is just an algorithm.

A glorified chatbot.

Behind the scenes, AI is just layers of code that examine huge amounts of data and try to make sense of it. It builds pathways, connections, patterns. When we’re talking about tools like ChatGPT or Grok, we’re talking about LLMs, or Large Language Models. These models are trained on massive collections of text (and sometimes images, audio, whatever, but we’ll stick to text for now). They analyze that data and build patterns from it. That’s the “training.” It’s all statistical analysis and pattern recognition.
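To make “training” a little more concrete, here’s a deliberately tiny sketch in Python. It just counts which word tends to follow which in a made-up three-sentence corpus. Real LLMs learn billions of neural-network weights rather than a table of counts, so treat this as a cartoon of the idea, not how ChatGPT or Grok actually work.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count how often each word is followed by each other word.

    A drastically simplified stand-in for LLM training: real models learn
    neural-network weights, not a lookup table of counts.
    """
    follows = defaultdict(Counter)
    for line in corpus:
        words = line.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

# A hypothetical, tiny training corpus.
corpus = [
    "a blt has bacon lettuce and tomato",
    "bacon lettuce and tomato on toast",
    "lettuce and tomato salad",
]

model = train_bigrams(corpus)
print(model["lettuce"].most_common(1))  # [('and', 3)]
print(model["and"].most_common(1))      # [('tomato', 3)]
```

The “pattern” here is nothing more than a tally: after “lettuce” comes “and,” because that’s what the counts say.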

And that’s how they generate responses. When you ask something like “What should I make for dinner?” the LLM isn’t thinking. It doesn’t know what dinner is. It’s processing words as numbers and feeding those numbers through layer after layer of math, where different words get different weights and different amounts of “attention,” until it spits out something that sounds like a human reply, one word (or piece of a word) at a time.
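Here’s one hedged way to picture “weights and attention.” The sketch below gives each word a made-up pair of numbers, scores every word against a query with a dot product, and turns those scores into attention weights that add up to 1. This is scaled dot-product attention for a single query. The vectors and the query are invented for illustration; in a real model they come from learned projections over thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query, in plain Python."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors, each one scaled by its attention weight.
    dims = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dims)]
    return weights, mixed

# Hypothetical two-number "embeddings" for each word of the question.
words = ["what", "should", "i", "make", "for", "dinner"]
vectors = [[0.1, 0.0], [0.0, 0.1], [0.2, 0.1], [0.5, 0.4], [0.1, 0.1], [0.9, 0.8]]

# Hypothetical query; here keys and values are just the word vectors themselves.
weights, mixed = attend(query=[1.0, 1.0], keys=vectors, values=vectors)
for word, w in zip(words, weights):
    print(f"{word:>6}: {w:.2f}")
```

“Dinner” ends up with the biggest share of attention only because we handed it the biggest numbers. Nothing in here knows what dinner is.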

So when you ask, “What’s in a BLT?” it breaks that sentence into tokens, maps them to numbers, runs those numbers through its internal logic, and comes up with words like “Bacon,” “Lettuce,” and “Tomato,” because those show up statistically near that phrase across thousands of recipes and sandwich blogs. In effect, it reassembles the patterns it picked up from those blogs and recipes into an answer.
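Here’s a toy version of the BLT example, assuming a simple word-level tokenizer and three invented “sandwich blog” snippets. The function names (`tokenize`, `build_vocab`, `cooccurring`) are hypothetical, and real tokenizers usually split text into sub-word pieces. The last step only counts which words show up alongside “blt”; an actual LLM predicts the next token from learned weights rather than looking anything up at answer time.

```python
import re
from collections import Counter

def tokenize(text):
    """Split text into lowercase word and punctuation tokens.

    Real LLM tokenizers (byte-pair encoding, for example) usually split text
    into sub-word pieces; this word-level split is a simplification.
    """
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(texts):
    """Give every distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for text in texts:
        for tok in tokenize(text):
            vocab.setdefault(tok, len(vocab))
    return vocab

def cooccurring(word, texts, ignore):
    """Count the other words that appear in the same snippet as `word`."""
    counts = Counter()
    for text in texts:
        tokens = tokenize(text)
        if word in tokens:
            counts.update(t for t in tokens if t.isalpha() and t != word and t not in ignore)
    return counts

# Hypothetical "sandwich blog" snippets standing in for training data.
snippets = [
    "A classic BLT is bacon, lettuce and tomato.",
    "The perfect BLT: crispy bacon, crisp lettuce, ripe tomato.",
    "BLT sandwiches need good bacon, lettuce and fresh tomato.",
]
question = "What's in a BLT?"

vocab = build_vocab(snippets + [question])
tokens = tokenize(question)
print(tokens)                      # ['what', "'", 's', 'in', 'a', 'blt', '?']
print([vocab[t] for t in tokens])  # the numbers the model actually works with

ignore = {"a", "the", "is", "and", "in"}  # filler words we don't care about
print(cooccurring("blt", snippets, ignore).most_common(3))
# [('bacon', 3), ('lettuce', 3), ('tomato', 3)]
```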

All of this seems straightforward enough.

But then there’s the weird part.

Sometimes, these models hallucinate. That’s the technical term. It means they say something that’s totally wrong, or that makes no sense, just as confidently as anything else and for no clear reason. And here’s the thing: nobody really knows why it happens.

There are theories, of course. Maybe the model is pulling from incomplete data. Maybe it starts predicting one word, then that leads to another, and suddenly it’s off the rails. Maybe it's misinterpreting the structure of what it was trained on. All possibilities. But the uncomfortable truth is that we don’t really know. These systems are so big, and so complex, that even the people building them can’t trace every decision they make.
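The “one word leads to another” theory is easy to see in miniature. This sketch generates text one word at a time from a hypothetical table of next-word probabilities, where each choice looks only at the previous word. Every single step is plausible, but the chain can still wander somewhere strange. Real LLMs condition on the whole preceding context, not just the last word, so this exaggerates the effect; it’s an illustration of the theory, not an explanation of why hallucinations happen.

```python
import random

# Hypothetical next-word probabilities, as if learned from training text.
NEXT = {
    "<start>": {"a": 1.0},
    "a": {"blt": 0.9, "ghost": 0.1},
    "blt": {"has": 1.0},
    "ghost": {"has": 1.0},
    "has": {"bacon": 0.6, "no": 0.4},
    "bacon": {"<end>": 1.0},
    "no": {"shadow": 0.5, "bacon": 0.5},
    "shadow": {"<end>": 1.0},
}

def generate(rng, max_words=10):
    """Build a sentence one word at a time; each pick depends only on the last word."""
    word, out = "<start>", []
    for _ in range(max_words):
        options = NEXT[word]
        word = rng.choices(list(options), weights=list(options.values()))[0]
        if word == "<end>":
            break
        out.append(word)
    return " ".join(out)

rng = random.Random(0)  # fixed seed so runs are repeatable
for _ in range(5):
    print(generate(rng))
```

Run it with enough different seeds and the usual result is “a blt has bacon,” but now and then you get “a blt has no shadow.” Nothing in the process ever checks whether the sentence is true.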

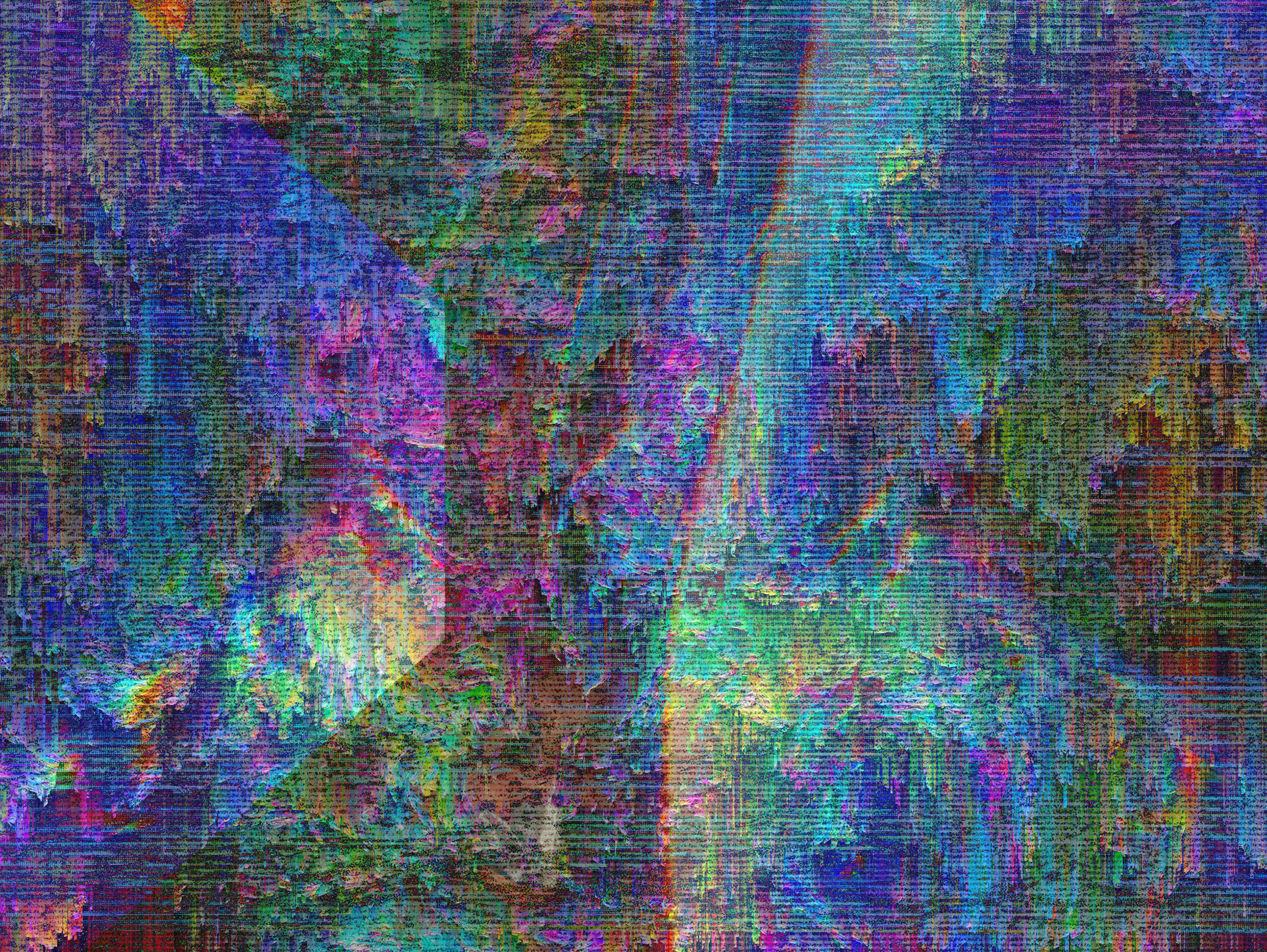

What if these hallucinations aren’t just glitches? What if they’re signals? What if, in digging through the entire internet (our histories, our stories, our memories), these models are connecting things we can’t? Seeing shapes in the noise we’ve forgotten how to notice?

What if the hallucination isn’t the bug? What if it’s the ghost?

I’m not saying these things are becoming sentient. Not really. But maybe, by reflecting back all the data we’ve fed them, they're picking up the residue we didn’t mean to leave behind. The echoes. The shadows. The stuff we forgot we buried.

The ghost in the machine isn’t some digital spirit or rogue consciousness. It’s us. It’s humanity: our fears, our biases, our ideas, our myths, sifted through a statistical net and flung back at us in a strange new shape.

AI doesn’t invent the future. It just reflects the past. And maybe that reflection is a little too clear.